精通

英语

和

开源

,

擅长

开发

与

培训

,

胸怀四海

第一信赖

锐英源精品开源心得,转载请注明:“锐英源www.wisestudy.cn,孙老师作品,电话13803810136。”需要全文内容也请联系孙老师。

A web crawler (also known as a web spider or ant) is a program, which browses the World Wide Web in a methodical, automated manner. Web crawlers are mainly used to create a copy of all the visited pages for later processing by a search engine, that will index the downloaded pages to provide fast searches. Crawlers can also be used for automating maintenance tasks on a web site, such as checking links, or validating HTML code. Also, crawlers can be used to gather specific types of information from Web pages, such as harvesting e-mail addresses (usually for spam).

一个网络爬虫(有时也称为一个web蜘蛛或ant)是一个程序,以系统化、自动化的方式的浏览万维网。 Web爬虫程序主要是用来创建一个访问页面的副本,服务于后来加工目的,让搜索引擎分析,将索引下载页面提供快速搜索。 爬虫还可用于自动化网站维护任务,如检查链接,或验证HTML代码。 同时,爬虫程序可以用来收集特定类型的信息从网页,如收集电子邮件地址(通常为垃圾邮件)。

In this article, I will introduce a simple Web crawler with a simple interface, to describe the crawling story in a simple C# program. My crawler takes the input interface of any Internet navigator to simplify the process. The user just has to input the URL to be crawled in the navigation bar, and click "Go".

在这篇文章中,我将介绍一个有一个简单的接口的简单Web爬虫软件,在一个简单的c#程序中描述爬行的过程。 我的爬虫软件需要任何网络浏览为输入接口,目的是简化处理。 用户只需要在导航栏里输入URL,然后单击“Go”。

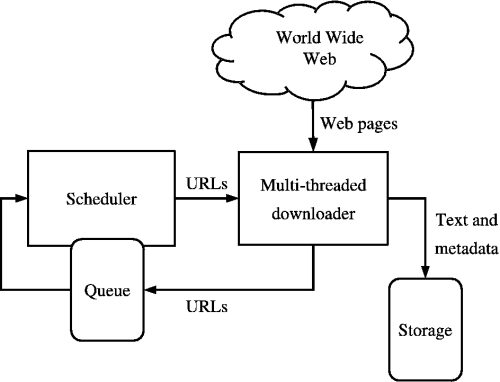

The crawler has a URL queue that is equivalent to the URL server in any large scale search engine. The crawler works with multiple threads to fetch URLs from the crawler queue. Then the retrieved pages are saved in a storage area as shown in the figure.

爬虫有一个URL队列,相当于在任何大型搜索引擎服务器的URL。 爬虫软件使用多个线程从队列爬虫获取url。 然后检索页面保存在存储区域如图。

The fetched URLs are requested from the Web using a C# Sockets library to avoid locking in any other C# libraries. The retrieved pages are parsed to extract new URL references to be put in the crawler queue, again to a certain depth defined in the Settings.

从网上获取url请求使用c#插座库来避免锁定在任何其他c#库。 检索页面解析提取新的URL引用放在爬虫软件队列,再在一定深度的定义 设置 。

In the next sections, I will describe the program views, and discuss some technical points related to the interface.在下一节中,我将描述程序的观点,并讨论一些技术点相关的接口

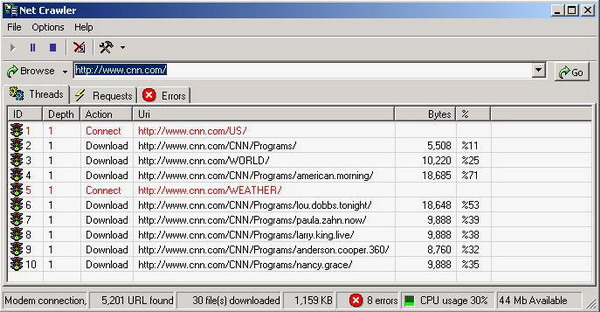

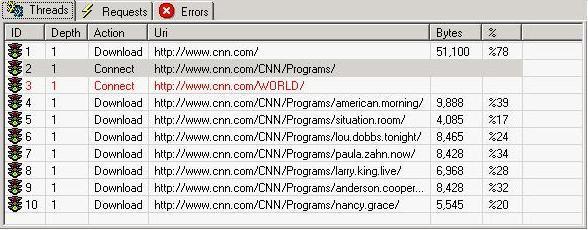

My simple crawler contains three views that can follow the crawling process, check the details, and view the crawling errors.我的简单爬虫软件包含三个视图可以爬行的过程,检查细节,并查看抓取错误。

Threads view is just a window to display all the threads' workout to the user. Each thread takes a URI from the URIs queue, and starts connection processing to download the URI object, as shown in the figure.线程视图只是一个窗口,其中显示所有用户线程的锻炼。 每个线程从队列的URI URI,并开始连接处理下载URI对象,如图。

.

.

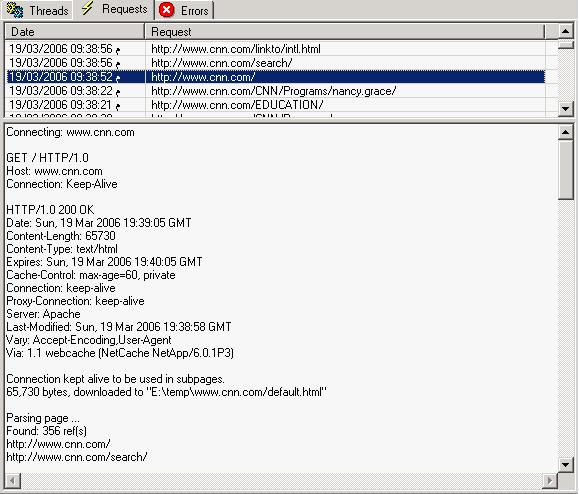

Requests view displays a list of the recent requests downloaded in the threads view, as in the following figure:

请求视图显示最近的下载请求线程的列表视图,如以下图

This view enables you to watch each request header, like: 这个视图允许您看每个请求头,如:

GET / HTTP/1.0 Host: www.cnn.com Connection: Keep-Alive

You can watch each response header, like: 你可以看到每一个响应头,如:

HTTP/1.0 200 OK Date: Sun, 19 Mar 2006 19:39:05 GMT Content-Length: 65730 Content-Type: text/html Expires: Sun, 19 Mar 2006 19:40:05 GMT Cache-Control: max-age=60, private Connection: keep-alive Proxy-Connection: keep-alive Server: Apache Last-Modified: Sun, 19 Mar 2006 19:38:58 GMT Vary: Accept-Encoding,User-Agent Via: 1.1 webcache (NetCache NetApp/6.0.1P3)

And a list of found URLs is available in the downloaded page: 和可用的发现url列表下载页面:

Parsing page ... Found: 356 ref(s) http://www.cnn.com/ http://www.cnn.com/search/ http://www.cnn.com/linkto/intl.html

Crawler settings are not complicated, they are selected options from many working crawlers in the market, including settings such as supported MIME types, download folder, number of working threads, and so on.

爬虫软件设置并不复杂,他们选择选择从许多爬虫的市场工作,包括设置,如支持MIME类型,下载文件夹,工作线程的数量,等等。

MIME types are the types that are supported to be downloaded by the crawler, and the crawler includes the default types to be used. The user can add, edit, and delete MIME types. The user can select to allow all MIME types, as in the following figure:

MIME类型是类型支持下载的爬虫,和爬虫包括默认的类型。 用户可以添加、编辑和删除的MIME类型。 用户可以选择允许所有的MIME类型,如以下图:

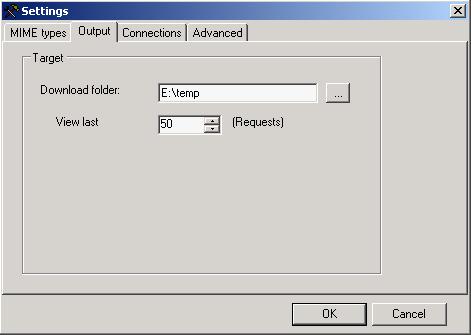

Output settings include the download folder, and the number of requests to keep in the requests view for reviewing request details.

输出设置包括下载文件夹,并请求的数量保持在请求视图检查请求的细节。

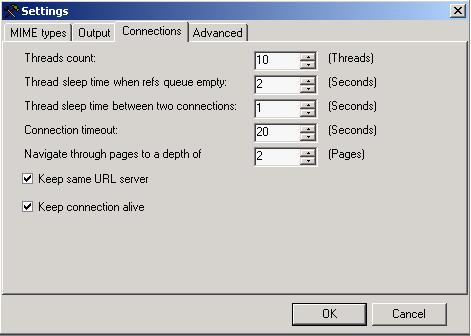

Thread count: the number of concurrent working threads in the crawler. 线程数: 在爬虫软件并发工作线程的数量。

Thread sleep time when the refs queue is empty: the time that each thread sleeps when the refs queue is empty. 线程睡眠时间当裁判队列为空: 每个线程的时间睡觉当裁判队列是空的。

Thread sleep time between two connections: the time that each thread sleeps after handling any request, which is a very important value to prevent hosts from blocking the crawler due to heavy loads. 两个连接之间的线程睡眠时间: 每个线程的时间睡在处理任何请求,这是一个非常重要的价值,以防止主机挡住了爬虫软件由于沉重的负荷。

Connection timeout: represents the send and receive timeout to all crawler sockets. 连接超时: 代表了发送和接收所有爬虫套接字超时。

Navigate through pages to a depth of: represents the depth of navigation in the crawling process. 浏览页面的深度: 代表在爬行过程中导航的深度。

Keep same URL server: to limit the crawling process to the same host of the original URL.保持相同的URL服务器: 限制爬行过程原始URL的同一主机。

Keep connection alive: to keep the socket connection opened for subsequent requests to avoid reconnection time.保持连接活着: 继续后续的请求,以避免重新连接的套接字连接打开的时间。

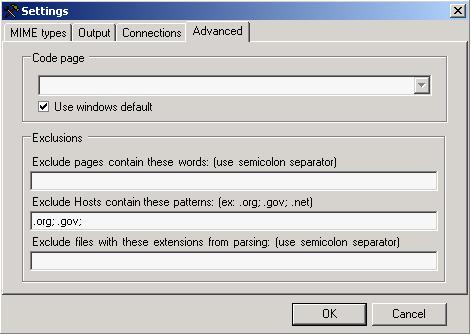

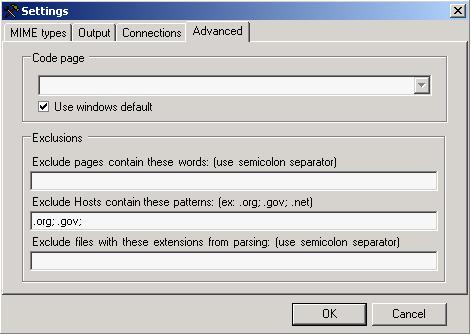

Code page to encode the downloaded text pages. 代码页编码页面下载的文本。

List of a user defined list of restricted words to enable the user to prevent any bad pages.定义的一个用户列表的列表限制的话,让用户防止不好的页面。

List of a user defined list of restricted host extensions to avoid blocking by these hosts. 定义的一个用户列表的列表限制主机扩展到这些主机避免阻塞。

List of a user defined list of restricted file extensions to avoid parsing non-text data. 定义的一个用户列表的列表限制文件扩展名来避免解析非文本数据

Keep-Alive is a request form the client to the server to keep the connection open after the response is finished for subsequent requests. That can be done by adding an HTTP header in the request to the server, as in the following request:

点火电极是一种请求客户机向服务器保持连接打开后完成后续请求的响应。 这可以通过添加一个HTTP头请求到服务器,如以下请求:

GET /CNN/Programs/nancy.grace/ HTTP/1.0 Host: www.cnn.com Connection: Keep-Alive

The "Connection: Keep-Alive" tells the server to not close the connection, but the server has the option to keep it opened or close it, but it should reply to the client socket regarding its decision. So the server can keep telling the client that it will keep it open, by including "Connection: Keep-Alive" in its reply, as follows:

“连接:维生”告诉服务器不关闭连接,但服务器可以选择打开或关闭它,但它应该回复客户端套接字的关于其决定。 所以服务器可以告诉客户端,它将保持开放,包括“连接:维生”在其回复,如下:

HTTP/1.0 200 OK Date: Sun, 19 Mar 2006 19:38:15 GMT Content-Length: 29025 Content-Type: text/html Expires: Sun, 19 Mar 2006 19:39:15 GMT Cache-Control: max-age=60, private Connection: keep-alive Proxy-Connection: keep-alive Server: Apache Vary: Accept-Encoding,User-Agent Last-Modified: Sun, 19 Mar 2006 19:38:15 GMT Via: 1.1 webcache (NetCache NetApp/6.0.1P3)

Or it can tell the client that it refuses, as follows:也可以告诉客户拒绝,如下:

HTTP/1.0 200 OK Date: Sun, 19 Mar 2006 19:38:15 GMT Content-Length: 29025 Content-Type: text/html Expires: Sun, 19 Mar 2006 19:39:15 GMT Cache-Control: max-age=60, private Connection: Close Server: Apache Vary: Accept-Encoding,User-Agent Last-Modified: Sun, 19 Mar 2006 19:38:15 GMT Via: 1.1 webcache (NetCache NetApp/6.0.1P3)

WebRequest and WebResponse problems: WebRequest和WebResponse问题:

When I started this article code, I was using the WebRequest class and WebResponse, like in the following code:

当我开始本文的代码,我使用 WebRequest 类和 WebResponse ,就像下面的代码:

WebRequest request = WebRequest.Create(uri);

WebResponse response = request.GetResponse();

Stream streamIn = response.GetResponseStream();

BinaryReader reader = new BinaryReader(streamIn, TextEncoding);

byte[] RecvBuffer = new byte[10240];

int nBytes, nTotalBytes = 0;

while((nBytes = reader.Read(RecvBuffer, 0, 10240)) > 0)

{

nTotalBytes += nBytes;

...

}

reader.Close();

streamIn.Close();

response.Close();

This code works well but it has a very serious problem as the WebRequest class function GetResponse locks the access to all other processes, the WebRequest tells the retrieved response as closed, as in the last line in the previous code. So I noticed that always only one thread is downloading while others are waiting to GetResponse. To solve this serious problem, I implemented my two classes, MyWebRequest and MyWebResponse.

这段代码运行良好但它有一个非常严重的问题 WebRequest 类函数 GetResponse 锁访问所有其他进程, WebRequest 告诉检索响应关闭,如在前面的最后一行代码。 所以我注意到,总是只有一个线程是当别人正在等待下载 GetResponse 。 为了解决这个严重的问题,我实现了我的两个类, MyWebRequest 和 MyWebResponse 。

MyWebRequest and MyWebResponse use the Socket class to manage connections, and they are similar to WebRequest and WebResponse, but they support concurrent responses at the same time. In addition, MyWebRequest supports a built-in flag, KeepAlive, to support Keep-Alive connections.

MyWebRequest 和 MyWebResponse 使用 套接字 类来管理连接,他们是相似的 WebRequest 和 WebResponse ,但他们同时支持并发响应。 此外, MyWebRequest 支持一个内置的国旗, KeepAlive 支持点火电极连接。

So, my new code would be like:所以,我的新代码就像:

request = MyWebRequest.Create(uri, request/*to Keep-Alive*/, KeepAlive);

MyWebResponse response = request.GetResponse();

byte[] RecvBuffer = new byte[10240];

int nBytes, nTotalBytes = 0;

while((nBytes = response.socket.Receive(RecvBuffer, 0,

10240, SocketFlags.None)) > 0)

{

nTotalBytes += nBytes;

...

if(response.KeepAlive && nTotalBytes >= response.ContentLength

&& response.ContentLength > 0)

break;

}

if(response.KeepAlive == false)

response.Close();

Just replace the GetResponseStream with a direct access to the socket member of the MyWebResponse class. To do that, I did a simple trick to make the socket next read, to start, after the reply header, by reading one byte at a time to tell header completion, as in the following code:

只是替换 GetResponseStream 直接访问 套接字 的成员 MyWebResponse 类。 为此,我做了一个简单的技巧使套接字阅读,首先,应答头后,通过读取一个字节告诉头完成,如以下代码:

/* reading response header */

Header = "";

byte[] bytes = new byte[10];

while(socket.Receive(bytes, 0, 1, SocketFlags.None) > 0)

{

Header += Encoding.ASCII.GetString(bytes, 0, 1);

if(bytes[0] == '\n' && Header.EndsWith("\r\n\r\n"))

break;

}

So, the user of the MyResponse class will just continue receiving from the first position of the page.

所以,用户的 MyResponse 类将继续接收从第一页的位置。

Thread management:线程管理:

The number of threads in the crawler is user defined through the settings. Its default value is 10 threads, but it can be changed from the Settings tab, Connections. The crawler code handles this change using the property ThreadCount, as in the following code:

爬虫软件的线程的数量是通过设置用户定义的。 它的默认值是10的线程,但它可以改变从Settings选项卡, 连接 。 爬虫软件的代码使用财产处理这种变化 ThreadCount的 ,就像下面的代码:

// number of running threads private int nThreadCount;

private int ThreadCount

{

get { return nThreadCount; }

set

{

Monitor.Enter(this.listViewThreads);

try

{

for(int nIndex = 0; nIndex < value; nIndex ++)

{

// check if thread not created or not suspended

if(threadsRun[nIndex] == null ||

threadsRun[nIndex].ThreadState != ThreadState.Suspended)

{

// create new thread

threadsRun[nIndex] = new Thread(new

ThreadStart(ThreadRunFunction));

// set thread name equal to its index

threadsRun[nIndex].Name = nIndex.ToString();

// start thread working function

threadsRun[nIndex].Start();

// check if thread dosn't added to the view

if(nIndex == this.listViewThreads.Items.Count)

{

// add a new line in the view for the new thread

ListViewItem item =

this.listViewThreads.Items.Add(

(nIndex+1).ToString(), 0);

string[] subItems = { "", "", "", "0", "0%" };

item.SubItems.AddRange(subItems);

}

}

// check if the thread is suspended

else if(threadsRun[nIndex].ThreadState ==

ThreadState.Suspended)

{

// get thread item from the list

ListViewItem item = this.listViewThreads.Items[nIndex];

item.ImageIndex = 1;

item.SubItems[2].Text = "Resume";

// resume the thread

threadsRun[nIndex].Resume();

}

}

// change thread value

}

catch(Exception)

{

}

Monitor.Exit(this.listViewThreads);

}

}

If TheadCode is increased by the user, the code creates a new thread or suspends suspended threads. Else, the system leaves the process of suspending extra working threads to threads themselves, as follows. Each working thread has a name equal to its index in the thread array. If the thread name value is greater than ThreadCount, it continues its job and goes for the suspension mode.

如果 TheadCode 增加了用户,代码创建一个新线程或暂停暂停线程。 其他系统的叶子暂停额外的工作线程,线程的过程本身,如下。 每个工作线程都有一个名称等于线程的索引数组。 如果线程名称值大于 ThreadCount的 继续工作,暂停模式。

Crawling depth:爬行深度:

It is the depth that the crawler goes in the navigation process. Each URL has an initial depth equal to its parent's depth plus one, with a depth 0 for the first URL inserted by the user. The fetched URL from any page is inserted at the end of the URL queue, which means a "first in first out" operation. And all the threads can be inserted in to the queue at any time, as shown in the following code:

爬虫软件在导航过程中有进入深度概念。 每个URL有初始深度,它等于其父母的深度+ 1,用户第一个访问的URL深度为0。 从页面获取URL会加入到URL队列的末尾,这意味着一个“先进先出”操作。 所有的线程可以随时插入到队列,如以下代码所示:

void EnqueueUri(MyUri uri)

{

Monitor.Enter(queueURLS);

try

{

queueURLS.Enqueue(uri);

}

catch(Exception)

{

}

Monitor.Exit(queueURLS);

}

And each thread can retrieve the first URL in the queue to request it, as shown in the following code:

每个线程可以检索请求队列中第一个URL,如以下代码所示:

MyUri DequeueUri()

{

Monitor.Enter(queueURLS);

MyUri uri = null;

try

{

uri = (MyUri)queueURLS.Dequeue();

}

catch(Exception)

{

}

Monitor.Exit(queueURLS)

return uri;

}